"All aircraft designers once learned to make paper airplanes."

Someone Smart


This article describes how I developed a simple captcha resolver. By repeating the steps, you can get acquainted with the technologies involved and build your own recognizer for experimentation and study. Some development details are omitted to keep the material simple. Note that I am not an ML specialist and am only superficially familiar with machine learning. I developed this captcha recognizer out of curiosity, to understand how the learning process works, so I would be very grateful for advice and comments from those who know the subject.

There are numerous parameters mentioned in the article that I don’t explain in detail. The goal of this approach is to make the article shorter and simpler while encouraging readers to experiment with the parameters themselves. I have used the simplest algorithms possible to keep the article concise and accessible. The recognizer you will end up with may not be very efficient, but it will function, and you’ll have the opportunity to improve it yourself.

This project uses the following libraries and modules:

- Numpy

- OpenCV

- Scikit-Learn

- Matplotlib

You can find the source code of some tools and functions in the repository.

More examples and methods of the ML framework can be found on the official scikit-learn page.

Step 0. Preparing labeled captchas

For the learning process, you need labeled captchas. "Labeled" means that every captcha must have its true solution, for example, by having the solution embedded in the file name. Generating a large number of labeled captchas is important for better results; however, it often increases the amount of manual work required, as you will need to verify the data at various steps.
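As a minimal sketch of what "the solution embedded in the file name" can look like in practice, the helper below recovers the label from a path. The naming scheme (`<text>.<ext>`, optionally followed by a dash and a suffix) and the function name `label_from_filename` are assumptions made for illustration, not necessarily the convention used in the repository.

```python
from pathlib import Path


def label_from_filename(path: str) -> str:
    """Return the captcha text stored in a file name.

    Assumes a hypothetical scheme: "<text>.<ext>" or "<text>-<suffix>.<ext>".
    """
    stem = Path(path).stem        # file name without directory and extension
    return stem.split('-', 1)[0]  # keep only the part before the first dash


print(label_from_filename('captchas/yxycgwc.jpg'))    # yxycgwc
print(label_from_filename('segments/yxycgwc-0.png'))  # yxycgwc
```

Keeping the label in the name means the dataset needs no separate index file, at the cost of restricting labels to characters that are valid in file names.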

To create an effective dataset, we start by generating around 15,000 labeled captchas. The first step is to create an image and print the text onto it, as demonstrated in the following code snippet:

    import os
    import random
    from typing import NamedTuple

    import numpy as np
    from PIL import Image, ImageDraw, ImageFont


    class Letter(NamedTuple):
        letter: str
        font: ImageFont.FreeTypeFont
        width: int
        height: int


    image_width, image_height = 250, 100
    canvas: Image.Image = Image.new('RGB', (image_width, image_height), color='white')
    draw: ImageDraw.ImageDraw = ImageDraw.Draw(canvas)

    # Pick 5 to 7 random characters, each with its own random font size.
    letters: list[Letter] = []
    captcha_text: str = ''.join(random.choices('abceghkmoprstwxy', k=random.randint(5, 7)))
    for char in captcha_text:
        font: ImageFont.FreeTypeFont = ImageFont.truetype(
            os.path.join(os.path.dirname(__file__), 'ttf', 'FreeMonoBold.ttf'),
            size=random.randint(35, 55)
        )
        _, _, width, height = draw.textbbox((0, 0), char, font=font)
        letters.append(Letter(letter=char, font=font, width=width - 2, height=height))

    # Center the text horizontally and shift each letter vertically by a random offset.
    x: int = (image_width - sum(a.width for a in letters)) // 2
    for letter in letters:
        y: int = (image_height - letter.height) // 2 + random.randint(-7, 7)
        draw.text(xy=(x, y), text=letter.letter, fill='black', font=letter.font)
        x += letter.width

    image: np.ndarray = np.array(canvas)

Afterward, noise is added to the image to simulate real-world conditions, enhancing the complexity of the captchas:

    # Paint 1000 randomly placed pixels with random gray levels.
    for _ in range(1000):
        x: int = random.randint(0, image.shape[1] - 1)
        y: int = random.randint(0, image.shape[0] - 1)
        c: int = random.randint(0, 255)
        image[y, x] = np.array([c, c, c])

Next, we apply a wave effect to further distort the text, making the captchas more challenging to read:

    import math

    height, width, _ = image.shape
    result: np.ndarray = np.zeros_like(image)
    x_shift: int = random.randint(0, 19)
    y_shift: int = random.randint(0, 39)
    for y in range(height):
        for x in range(width):
            # Source pixel for (x, y): a sine offset along each axis.
            sx: int = round(x + 3 * math.sin(math.pi / 20 * (y - x_shift)))
            sy: int = round(y + 5 * math.sin(math.pi / 40 * (x - y_shift)))
            if 0 <= sx < width and 0 <= sy < height:
                result[y, x] = image[sy, sx]
            else:
                # Outside the source image: fill with white.
                result[y, x] = np.array([255, 255, 255])

You can find the full source code for the captcha generation script in the repository I mentioned before.
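Generating thousands of captchas with a per-pixel Python loop can be slow. The sketch below shows one possible vectorized variant of the same wave effect, using NumPy index arrays instead of nested loops. The function name `wave` and its signature are my own; the formulas and the white fill are taken from the loop version above.

```python
import numpy as np


def wave(image: np.ndarray, x_shift: int, y_shift: int) -> np.ndarray:
    """Apply the sine-wave distortion to an H x W x 3 image without Python loops."""
    height, width = image.shape[:2]
    y, x = np.indices((height, width))  # row and column index of every pixel
    # Source coordinates, same formulas as in the loop version.
    sx = np.rint(x + 3 * np.sin(np.pi / 20 * (y - x_shift))).astype(int)
    sy = np.rint(y + 5 * np.sin(np.pi / 40 * (x - y_shift))).astype(int)
    inside = (sx >= 0) & (sx < width) & (sy >= 0) & (sy < height)
    result = np.full_like(image, 255)   # white where the source is out of bounds
    result[inside] = image[sy[inside], sx[inside]]
    return result


# Usage on a synthetic image: a black rectangle on a white background.
image = np.full((100, 250, 3), 255, dtype=np.uint8)
image[40:60, 100:150] = 0
distorted = wave(image, x_shift=5, y_shift=10)
print(distorted.shape)  # (100, 250, 3)
```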

Example of an original captcha image:

Step 1. Cleaning and splitting images into segments

I converted the original images to grayscale, inverted them, and removed the JPEG compression artifacts.

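    import numpy as np
    import cv2

    image: np.ndarray = cv2.imread('captcha.jpg')
    # Grayscale and invert: the text becomes bright on a dark background.
    image = 255 - cv2.cvtColor(image, code=cv2.COLOR_BGR2GRAY)
    # Zero out faint pixels (JPEG artifacts); brighter pixels keep their value.
    image = cv2.threshold(image, thresh=58, maxval=0, type=cv2.THRESH_TOZERO)[1]
    # Stretch the remaining values to the full 0..255 range.
    cv2.normalize(image, dst=image, alpha=0, beta=255, norm_type=cv2.NORM_MINMAX)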
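To make the effect of these two OpenCV calls easier to see, here is a NumPy-only sketch of the same idea on a tiny array. It is an illustration, not a replacement for the code above: the function name `clean` is hypothetical, and the rounding in the last step may differ slightly from what OpenCV does internally.

```python
import numpy as np


def clean(gray: np.ndarray, thresh: int = 58) -> np.ndarray:
    """Invert, zero out faint pixels, then stretch the rest to 0..255."""
    inverted = 255 - gray.astype(np.uint8)            # dark text becomes bright
    kept = np.where(inverted > thresh, inverted, 0)   # same idea as THRESH_TOZERO
    lo, hi = int(kept.min()), int(kept.max())
    if hi == lo:                                      # flat image: nothing to stretch
        return np.zeros_like(kept)
    scaled = (kept.astype(np.float64) - lo) * 255.0 / (hi - lo)  # min-max scaling
    return np.rint(scaled).astype(np.uint8)


gray = np.array([[255, 230, 175],
                 [ 55, 197,  95]], dtype=np.uint8)
print(clean(gray))
# [[  0   0 102]
#  [255   0 204]]
```

In the example, the inverted values 25 and 58 do not exceed the threshold and become zero, while 80, 160 and 200 are stretched so that the brightest pixel reaches 255.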

Cleaned image:

Now I need to separate the text from the noise. Even the smallest symbol, such as a small "c", has at least 120 non-zero pixels. I found all groups of connected pixels and kept the groups that contain at least 120 pixels and pass through the middle third of the image height.

    import numpy as np
    import cv2
    from skimage import measure

    bin_image: np.ndarray = cv2.threshold(image, thresh=0, maxval=1, type=cv2.THRESH_BINARY)[1]
    # Label groups of connected pixels; connectivity=1 means 4 neighbours per pixel.
    blobs_labels: np.ndarray = measure.label(bin_image, background=0, connectivity=1)

    segments: list[np.ndarray] = []
    # Probe the middle third of the image on a 4-pixel grid, left to right.
    for x in range(0, blobs_labels.shape[1], 4):
        for y in range(blobs_labels.shape[0] // 3, blobs_labels.shape[0] // 3 * 2, 4):
            blob_num: int = int(blobs_labels[y, x])
            if blob_num:
                segment: np.ndarray = image * (blobs_labels == blob_num)
                # Erase the blob from the image so it is not found twice.
                image -= segment
                if np.count_nonzero(segment) >= 120:
                    segments.append(segment)

Ensure you use the same cleaning and splitting methods in both training and production to maintain consistency.
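If "groups of connected pixels" sounds abstract, the following sketch shows the idea behind connected-component labeling on a tiny binary mask. It is a plain breadth-first search over 4-connected neighbours, written for clarity rather than speed; the library call used above does the same job far more efficiently. The function names `label_components` and `large_components` are hypothetical.

```python
from collections import deque

import numpy as np


def label_components(mask: np.ndarray) -> np.ndarray:
    """Label 4-connected groups of non-zero pixels as 1, 2, 3, ... (0 = background)."""
    height, width = mask.shape
    labels = np.zeros(mask.shape, dtype=np.int32)
    current = 0
    for sy in range(height):
        for sx in range(width):
            if not mask[sy, sx] or labels[sy, sx]:
                continue                      # background or already labeled
            current += 1
            labels[sy, sx] = current
            queue = deque([(sy, sx)])
            while queue:                      # flood the whole group
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < height and 0 <= nx < width
                            and mask[ny, nx] and not labels[ny, nx]):
                        labels[ny, nx] = current
                        queue.append((ny, nx))
    return labels


def large_components(mask: np.ndarray, min_pixels: int) -> list:
    """Return one boolean mask per group that has at least min_pixels pixels."""
    labels = label_components(mask)
    sizes = np.bincount(labels.ravel())       # sizes[n] = pixel count of label n
    return [labels == n for n in range(1, len(sizes)) if sizes[n] >= min_pixels]


mask = np.array([[1, 1, 0, 0, 1],
                 [1, 0, 0, 0, 0],
                 [0, 0, 1, 1, 1]])
print(len(large_components(mask, min_pixels=3)))  # 2
```

The single pixel in the top-right corner forms its own group of size 1 and is dropped, which is exactly how isolated noise pixels are filtered out by the 120-pixel rule.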

In this kind of captcha, some symbols (usually graphic representations of letters) may stick together. I saved each of the separated image parts as a grayscale PNG file and called these parts segments.

    cv2.imwrite(
        filename='filename.png', img=segment, params=[cv2.IMWRITE_PNG_COMPRESSION, 9]
    )

Example of segments:

Symbols stuck together:

Algorithms like Watershed or Soaking often split the image along the yellow line rather than in the right place. This happens because the symbols are corrupted by noise, and even if we fill the holes in the symbols, it will not help. There is no easy way to separate them, so I decided not to separate these glued symbols.

Step 2. Segment Length Recognizer

At this point, the data was ready for training the first model, designed to determine the segment length in characters. Machine learning offers various models, including classifiers and regression models. Classifiers analyze input data (called "features") and assign each sample to one of the classes defined during training. This capability perfectly suited my need to detect the class of a segment and interpret it as a length. After comparing several classifier models, I chose the Support Vector Classifier (SVC) with the default gamma setting, as it demonstrated good prediction accuracy and was easy to use.

Training the classifier required two components: a list of image data and a list of corresponding classes. The image data needed to be in flat arrays (one-dimensional), with all arrays of the same size. The classes were represented as integers within a specific range, corresponding to the segment lengths, which could vary from 1 to 7 characters. Therefore, the classes ranged from 0 to 6.

For convenience, I sorted the segments by width and selected a few hundred segments of each class. Training the model on this small sample of data enabled it to automatically sort the remaining segments quite accurately.

To prepare the data, I loaded the image data, resized all images to the same dimensions, and flattened them into one-dimensional arrays. I then collected the training data and classes into the "features" and "targets" variables.
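A rough sketch of this preparation step is shown below. To keep it dependency-free, it uses a simple nearest-neighbour resize written in NumPy as a stand-in for `cv2.resize`; the helper names, the `(image, length)` sample format and the target size used in the example are assumptions for illustration only.

```python
import numpy as np


def resize_nearest(image: np.ndarray, height: int, width: int) -> np.ndarray:
    """Nearest-neighbour resize of a 2-D image (a stand-in for cv2.resize)."""
    rows = np.arange(height) * image.shape[0] // height  # source row per output row
    cols = np.arange(width) * image.shape[1] // width    # source column per output column
    return image[rows[:, None], cols]


def build_dataset(samples, height: int, width: int):
    """Turn (image, length_in_characters) pairs into flat features and 0-based targets."""
    features = np.array(
        [resize_nearest(img, height, width).ravel() for img, _ in samples],
        dtype=np.uint8,
    )
    # Lengths 1..7 become classes 0..6.
    targets = np.array([length - 1 for _, length in samples], dtype=np.uint8)
    return features, targets


# Two synthetic "segments" of different sizes, labeled as 1 and 2 characters long.
samples = [(np.ones((10, 20), dtype=np.uint8), 1),
           (np.zeros((12, 50), dtype=np.uint8), 2)]
features, targets = build_dataset(samples, height=5, width=8)
print(features.shape, targets)  # (2, 40) [0 1]
```

Every row of `features` has the same length (height times width), which is what the classifier requires.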

Example of "features" content:

    array([[0, 0, 0, ..., 0, 0, 0],
           [0, 0, 0, ..., 0, 0, 0],
           [0, 0, 0, ..., 0, 0, 0],
           ...,
           [0, 0, 0, ..., 0, 0, 0],
           [0, 0, 0, ..., 0, 0, 0],
           [0, 0, 0, ..., 0, 0, 0]], dtype=uint8)

Example of "targets" content:

array([5, 5, 5, ..., 0, 0, 0], dtype=uint8)

With this data I trained the classifier.

    from sklearn import svm

    clf = svm.SVC(gamma='scale')  # Support Vector Classifier
    clf.fit(X=features, y=targets)

With this classifier, I was able to sort all segments automatically. However, to ensure accuracy, I visually inspected the sorted images. The quality of the training data is crucially important; good training data leads to more accurate models and reliable predictions.

At this point I split all segment images into training and testing parts and fitted the classifier again.

    from sklearn import svm, metrics
    from sklearn.model_selection import train_test_split

    # Hold out 10% of the data for testing, keeping the class proportions.
    x_train, x_test, y_train, y_test = train_test_split(
        features, targets, test_size=0.1, shuffle=True, stratify=targets
    )
    clf = svm.SVC(gamma='scale')  # Support Vector Classifier
    clf.fit(X=x_train, y=y_train)

After the training process, I tested the model. Testing the model helps to detect failed predictions and assess the accuracy of the predictions. It also helps to identify the classes that have more failed predictions than others.

I used the testing part to create a confusion matrix. I found this accuracy test informative and clear.

    import matplotlib.pyplot as plt

    classes = tuple('1234567')  # class index 0..6 -> segment length 1..7
    predicted: np.ndarray = clf.predict(x_test)
    disp: metrics.ConfusionMatrixDisplay = metrics.ConfusionMatrixDisplay.from_predictions(
        y_true=[classes[a] for a in y_test],
        y_pred=[classes[a] for a in predicted],
        # Sort the labels so the matrix rows and columns follow the segment length.
        labels=[classes[a] for a in sorted(set(y_test))],
        normalize='true'
    )
    disp.figure_.suptitle('Confusion Matrix')
    plt.show()

Confusion matrix:

Here I saw some problems with recognizing 4-letter and 6-letter segments. 4-letter segments were recognised as 3-letter or 5-letter segments 16 percent of the time. 6-letter segments were recognised as 5-letter or 7-letter segments 28 percent of the time. But since these types of segment are quite rare, this recognition error will have a small impact on the final result.
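The percentages above are read from a row-normalized matrix: with `normalize='true'`, every row is divided by the number of test samples that truly belong to that class, so each row sums to 1. The small NumPy sketch below reproduces that calculation on made-up labels; the function name and the data are purely illustrative.

```python
import numpy as np


def row_normalized_confusion(y_true, y_pred, n_classes: int) -> np.ndarray:
    """Confusion matrix where row i shows how true class i was predicted (rows sum to 1)."""
    counts = np.zeros((n_classes, n_classes), dtype=np.int64)
    np.add.at(counts, (np.asarray(y_true), np.asarray(y_pred)), 1)  # count (true, predicted) pairs
    row_sums = counts.sum(axis=1, keepdims=True)
    return counts / np.maximum(row_sums, 1)   # avoid division by zero for empty classes


# Made-up labels: class 0 is misread as class 1 once out of four times.
y_true = [0, 0, 0, 0, 1, 1, 2, 2]
y_pred = [0, 0, 0, 1, 1, 1, 2, 1]
print(row_normalized_confusion(y_true, y_pred, n_classes=3))
# [[0.75 0.25 0.  ]
#  [0.   1.   0.  ]
#  [0.   0.5  0.5 ]]
```

Reading along a row answers the question "what happened to the samples of this true class", which is why rare classes can show large error percentages even when the absolute number of errors is small.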

From here on you will see low recognition accuracy in some cases. I intentionally left it as is, so as not to complicate the article and to let readers try to improve the results on their own. I mention some approaches to improvement at the end of the article, in the "Conclusion" section.

Tip: When training a model, try downsizing the images and comparing the accuracy scores. This often makes the models smaller and faster without losing accuracy. As an example, I used segment images downsampled by a factor of four to train this model.

    image = cv2.resize(
        image, dsize=None, fx=0.25, fy=0.25, interpolation=cv2.INTER_NEAREST_EXACT
    )

At this step I had the following information for every captcha: its text, the segment indexes, and the segment lengths. Using this, I determined the text of every segment.

E.g.: If the captcha "yxycgwc" was split into five segments: "1/yxycgwc-0…", "1/yxycgwc-2…", "1/yxycgwc-3…", "2/yxycgwc-1…" and "2/yxycgwc-4…", that means you can rename these segments to "1/y…", "1/c…", "1/g…", "2/xy…" and "2/wc…".
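The renaming in this example boils down to slicing the captcha text by the predicted segment lengths. Below is a minimal sketch, assuming the segment index corresponds to the left-to-right order of the segments; the function name `split_text` and the error handling are my own additions.

```python
def split_text(text: str, lengths: list) -> list:
    """Split captcha text into per-segment strings, given segment lengths left to right."""
    if sum(lengths) != len(text):
        # A wrong length prediction somewhere: this captcha cannot be labeled reliably.
        raise ValueError(f'lengths {lengths} do not add up to {len(text)} characters')
    parts, pos = [], 0
    for length in lengths:
        parts.append(text[pos:pos + length])
        pos += length
    return parts


# Segments 0..4 of "yxycgwc" with predicted lengths 1, 2, 1, 1, 2.
print(split_text('yxycgwc', [1, 2, 1, 1, 2]))  # ['y', 'xy', 'c', 'g', 'wc']
```

The length check is a cheap way to catch captchas where the length recognizer made a mistake, so that wrongly labeled segments do not leak into the training data for the symbol models.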

Symbol Recognizer

Because of glued symbols, the recognition accuracy was low, so I decided to train special models to recognize characters in different cases: single symbol, first in a segment, middle in a segment and last symbol in a segment. The processing pipeline was designed as follows: split the clean image, process segments containing a single symbol with the "single" model, and split segments with two or more symbols for processing by the other models: "first", "last" and "middle". This approach improves the overall accuracy due to better recognition of clean characters.

When the images were labeled with letters, I collected statistics about the width of the symbols:

| Width (px) | a | b | c | e | g | h | k | m | o | p | r | s | t | w | x | y |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| min | 17 | 19 | 18 | 18 | 20 | 19 | 19 | 17 | 17 | 20 | 17 | 17 | 15 | 19 | 21 | 18 |
| max | 37 | 41 | 37 | 35 | 38 | 38 | 38 | 42 | 36 | 39 | 36 | 33 | 36 | 39 | 36 | 38 |
| avg | 26 | 29 | 27 | 26 | 28 | 28 | 27 | 32 | 25 | 28 | 26 | 24 | 24 | 28 | 29 | 28 |
| 1% | 18 | 21 | 19 | 19 | 21 | 20 | 21 | 23 | 18 | 20 | 18 | 18 | 16 | 20 | 21 | 20 |
| 99% | 34 | 39 | 34 | 34 | 37 | 36 | 35 | 41 | 34 | 37 | 35 | 31 | 34 | 37 | 36 | 37 |

And height of the symbols:

| Height (px) | a | b | c | e | g | h | k | m | o | p | r | s | t | w | x | y |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| max | 34 | 45 | 34 | 33 | 44 | 47 | 44 | 34 | 32 | 43 | 35 | 36 | 40 | 34 | 34 | 47 |
| 99% | 32 | 43 | 32 | 31 | 43 | 43 | 43 | 34 | 31 | 43 | 33 | 33 | 39 | 33 | 33 | 45 |

I used these statistics for cutting symbols from images. Additionally, these statistics could be used for some optimization processes I will talk about later.
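Statistics like these can be collected with a few NumPy calls. The sketch below computes the same five rows for one list of measurements; the function name `size_stats` is hypothetical and the input values are synthetic, not taken from my dataset.

```python
import numpy as np


def size_stats(values) -> dict:
    """Summarize symbol sizes in pixels: extremes, mean, and 1st/99th percentiles."""
    arr = np.asarray(values)
    return {
        'min': int(arr.min()),
        'max': int(arr.max()),
        'avg': int(round(float(arr.mean()))),
        '1%': int(round(float(np.percentile(arr, 1)))),
        '99%': int(round(float(np.percentile(arr, 99)))),
    }


# Synthetic widths 0..100, used only to demonstrate the call.
print(size_stats(range(101)))
```

The 1% and 99% rows are useful because they ignore rare outliers, for example a symbol that was clipped or merged with noise, which would otherwise distort the min and max rows.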

Step 3. Single symbols

Based on the width and height statistics, I decided to use an image size of 40x45. So, I read single letter images, resized them to 40x45 and trained the classifier.

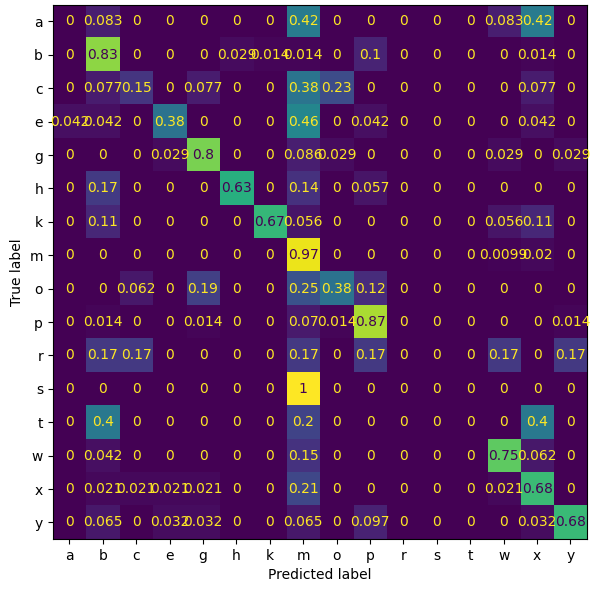

Confusion matrix:

Step 4. First symbol in segment

To train this classifier I used segments with two or more symbols. I read them, cut 40 pixels from the left side, resized the height to 45 pixels, flattened these images and trained the model.

Cutting first symbols:

Confusion matrix:

Step 5. Last symbol in segment

I repeated the previous process but took 40 pixels from the right side of segments.
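Cutting the first and the last symbol is plain array slicing. The sketch below assumes the segment is first cropped to its bounding box, because segments produced by the splitting step are still full-size images with empty borders; that cropping helper and all function names are my own assumptions. Resizing the height to 45 pixels is left out.

```python
import numpy as np


def crop_to_content(segment: np.ndarray) -> np.ndarray:
    """Trim empty rows and columns around the non-zero pixels (assumes a non-empty segment)."""
    rows = np.flatnonzero(segment.any(axis=1))
    cols = np.flatnonzero(segment.any(axis=0))
    return segment[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]


def cut_first(segment: np.ndarray, width: int = 40) -> np.ndarray:
    """Leftmost `width` columns: the area containing the first symbol."""
    return segment[:, :width]


def cut_last(segment: np.ndarray, width: int = 40) -> np.ndarray:
    """Rightmost `width` columns: the area containing the last symbol."""
    return segment[:, -width:]


# Synthetic segment: a 6 x 60 block of "ink" inside a 10 x 100 image.
segment = np.zeros((10, 100), dtype=np.uint8)
segment[2:8, 20:80] = 255
cropped = crop_to_content(segment)
print(cropped.shape, cut_first(cropped).shape, cut_last(cropped).shape)
# (6, 60) (6, 40) (6, 40)
```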

Cutting last symbols:

Confusion matrix:

Step 6. Middle symbols in segment

Tip: For recognizing middle symbols, I used a classifier again to keep this article simpler. However, regression models might be a better fit for this task.

For this model I used segments with three or more symbols. I split the segments based on the average symbol width and cut 40x45 images.

Cutting middle symbols:

Confusion matrix:

I got very poor prediction accuracy and tried to reduce the cutting frame to improve the result. I cut 20x45 images and retrained the model.

With 20x45 images I got acceptable results. This is because the neighbouring symbols had less influence on the training data.
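One way to interpret "splitting based on average symbol width" is to divide the segment width by the number of symbols and center a narrow frame on each middle symbol. The sketch below follows that interpretation; it is an assumption about the geometry, not the exact code from the repository, and the function name `cut_middle` is hypothetical.

```python
import numpy as np


def cut_middle(segment: np.ndarray, n_symbols: int, frame_width: int = 20) -> list:
    """Cut one frame per middle symbol (all symbols except the first and the last).

    Assumes the segment is already cropped to its content and that the symbols
    are spread evenly, so symbol i is centered at (i + 0.5) * average_width.
    """
    average_width = segment.shape[1] / n_symbols
    frames = []
    for i in range(1, n_symbols - 1):
        center = (i + 0.5) * average_width
        left = int(round(center - frame_width / 2))
        # Keep the frame inside the segment.
        left = max(0, min(left, segment.shape[1] - frame_width))
        frames.append(segment[:, left:left + frame_width])
    return frames


# A 45 x 90 segment with three symbols has one middle symbol, centered at column 45.
segment = np.tile(np.arange(90), (45, 1))
frames = cut_middle(segment, n_symbols=3)
print(len(frames), frames[0].shape, frames[0][0, 0], frames[0][0, -1])
# 1 (45, 20) 35 54
```

A narrower frame covers less of the neighbouring symbols, which matches the observation that 20x45 frames gave better results than 40x45 frames.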

Step 7. Captcha reader

At this step, I combined the functions of captcha cleaning, segmentation and character separation, and classifier models into one application.

Here is the application algorithm:

After developing the application, I tested it on a thousand newly labeled captchas. I processed them with the app and calculated the success rate: the application correctly recognized 75% of the captchas.
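The overall flow of the reader can be sketched as follows. The models are passed in as plain callables so that the routing logic is visible on its own; all names and signatures here are hypothetical, and the real application also includes the cleaning, splitting and cutting steps described earlier.

```python
from typing import Callable, Sequence, TypeVar

S = TypeVar('S')  # whatever type represents one segment image


def read_captcha(
    segments: Sequence[S],
    predict_length: Callable[[S], int],
    predict_single: Callable[[S], str],
    predict_first: Callable[[S], str],
    predict_middle: Callable[[S, int, int], str],
    predict_last: Callable[[S], str],
) -> str:
    """Read the segments left to right and join the recognized characters."""
    text = []
    for segment in segments:
        length = predict_length(segment)
        if length == 1:
            text.append(predict_single(segment))   # clean single symbol
            continue
        text.append(predict_first(segment))
        for index in range(1, length - 1):          # middle symbols, if any
            text.append(predict_middle(segment, index, length))
        text.append(predict_last(segment))
    return ''.join(text)


def success_rate(predicted: Sequence[str], expected: Sequence[str]) -> float:
    """Share of captchas whose whole text was recognized correctly."""
    hits = sum(p == e for p, e in zip(predicted, expected))
    return hits / len(expected)


# Stub "models" that work on strings instead of images, to demonstrate the routing only.
result = read_captcha(
    ['y', 'xy', 'c', 'g', 'wc'],
    predict_length=len,
    predict_single=lambda s: s,
    predict_first=lambda s: s[0],
    predict_middle=lambda s, i, n: s[i],
    predict_last=lambda s: s[-1],
)
print(result)                                        # yxycgwc
print(success_rate([result, 'abd'], ['yxycgwc', 'abc']))  # 0.5
```

Note that the success rate counts a captcha as correct only when every character matches, so a single wrong symbol makes the whole captcha a failure.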

Conclusion

Future improvements may include increasing the dataset size, experimenting with more advanced ML models, narrower model specialization, more accurate image denoising, and improving the image segmentation process to handle glued characters more efficiently. By continuing to iterate and improve on these methods, it is possible to create much more accurate and robust captcha resolvers.

If you can achieve the result using standard algorithms without machine learning models, use the algorithms. For example, if the segment width is less than 25…27 pixels, you can be sure that it contains a single character, because two characters will not fit in such an image. The models, however, can make mistakes even in this obvious case.
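Such a rule is easy to put in front of the length model. In the sketch below, the threshold of 25 pixels is the conservative end of the range mentioned above, and the function name and the `predict` callback are hypothetical.

```python
from typing import Callable


def segment_length(width: int, predict: Callable[[], int], single_max_width: int = 25) -> int:
    """Return the segment length, skipping the model when the answer is obvious."""
    if width < single_max_width:
        return 1          # too narrow for two characters: no model needed
    return predict()      # otherwise ask the length classifier


print(segment_length(20, predict=lambda: 3))  # 1, the model is not consulted
print(segment_length(40, predict=lambda: 2))  # 2, taken from the model
```

Passing the model as a callback means it is only evaluated when the rule cannot decide, which also saves some computation.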

Acknowledgments

I would like to express my sincere gratitude to Alexander for his invaluable help and support during the writing of this article.
